Authors:

(1) Xiaofan Yu, University of California San Diego, La Jolla, California, USA;

(2) Anthony Thomas, University of California San Diego, La Jolla, California, USA;

(3) Ivannia Gomez Moreno, CETYS University, Campus Tijuana, Tijuana, Mexico;

(4) Louis Gutierrez, University of California San Diego, La Jolla, California, USA;

(5) Tajana Šimunić Rosing, University of California San Diego, La Jolla, USA.

8 EVALUATION OF LIFEHDsemi AND LIFEHDa

In this section, we compare LifeHDsemi and LifeHDa, our proposed extensions of LifeHD, with similar existing designs.

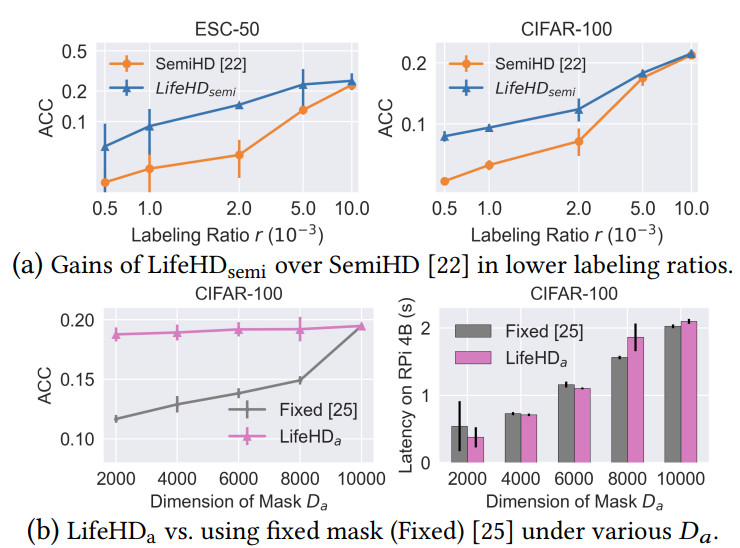

Performance of LifeHDsemi. To evaluate LifeHDsemi in a low-label scenario, we compare it with SemiHD [22], the state-of-the-art HDC method for semi-supervised learning. We adapt SemiHD [22] to the single-pass setting by introducing a pseudolabel assignment threshold: when the cosine similarity between an unlabeled sample and its nearest class hypervector exceeds the threshold, we assign that class as the sample's pseudolabel, and the sample is then used to update that class hypervector in SemiHD. We sweep several threshold values and report the best result for comparison. Fig. 15 (a) compares LifeHDsemi and SemiHD [22] on ESC-50 and CIFAR-100 across various labeling ratios 𝑟 < 0.01. The advantages of LifeHDsemi are most pronounced when labels are scarce, which is the weakly supervised scenario LifeHDsemi primarily targets: LifeHDsemi improves ACC by up to 10.25% on ESC-50 and 3.6% on CIFAR-100. This gain stems from the unsupervised nature of LifeHD, which allows it to autonomously organize prominent cluster HVs, especially when all samples from a class lack labels. As the labeling ratio increases, LifeHDsemi’s advantage over SemiHD diminishes, because additional labels strengthen SemiHD’s performance.
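The single-pass SemiHD adaptation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code or SemiHD's reference implementation: samples are assumed to arrive already encoded as hypervectors, and class hypervectors are assumed to be updated by simple element-wise addition (bundling). The names `cosine`, `assign_pseudolabel`, and `single_pass_update`, as well as any threshold value used with them, are hypothetical.

```python
import math
from typing import Dict, Iterable, List, Optional, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def assign_pseudolabel(sample: Vector, class_hvs: Dict[str, Vector],
                       threshold: float) -> Optional[str]:
    """Return the nearest class if its similarity exceeds threshold, else None."""
    if not class_hvs:
        return None
    best = max(class_hvs, key=lambda c: cosine(sample, class_hvs[c]))
    return best if cosine(sample, class_hvs[best]) > threshold else None


def single_pass_update(stream: Iterable[Tuple[Vector, Optional[str]]],
                       class_hvs: Dict[str, Vector],
                       threshold: float) -> Dict[str, Vector]:
    """Visit each (hypervector, label-or-None) pair exactly once.

    Labeled samples update their own class HV. Unlabeled samples update the
    nearest class HV only if they receive a pseudolabel. The update rule is
    ASSUMED to be element-wise addition (bundling).
    """
    for hv, label in stream:
        target = label if label is not None else assign_pseudolabel(hv, class_hvs, threshold)
        if target is None:
            continue  # similarity too low: the unlabeled sample is discarded
        current = class_hvs.setdefault(target, [0.0] * len(hv))
        class_hvs[target] = [c + x for c, x in zip(current, hv)]
    return class_hvs
```

Each sample is visited once, in arrival order, and unlabeled samples whose best similarity falls below the threshold are simply dropped. Sweeping `threshold` corresponds to the threshold search described above: a low value admits more (and noisier) pseudolabels, while a high value discards more unlabeled data.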

Performance of LifeHDa. LifeHDa offers an interface for trading a small loss in accuracy for efficiency gains by adaptively pruning insignificant dimensions. We compare LifeHDa with prior HDC works that employ a fixed mask throughout training [25]; Fig. 15 (b) presents the results on CIFAR-100, including ACC and per-batch training latency on an RPi 4B. Fixed masks hurt HDC learning, especially at smaller dimensions: such masks cannot adapt to new hypervectors in class-incremental streams, where dimensions that were insignificant early on may become crucial later in training. LifeHDa addresses this issue by adjusting the mask upon novelty detection. Using only 20% of LifeHD’s full HD dimension, it loses only 0.71% in ACC while achieving a 4.5× efficiency gain over the complete LifeHD. The overhead of adaptively adjusting the mask is negligible as long as novelty detection occurs infrequently.
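The contrast between a fixed mask and a mask refreshed on novelty detection can be sketched roughly in Python. The text does not specify how dimension significance is measured, so this sketch *assumes* a dimension's significance is the variance of its values across the stored hypervectors (dimensions on which all hypervectors agree cannot help tell them apart). `AdaptiveMask`, `build_mask`, `masked_cosine`, `dimension_scores`, and `keep_ratio` are hypothetical names, with `keep_ratio=0.2` chosen only to mirror the 20% setting above; this is not LifeHDa's actual implementation.

```python
import math
from typing import List, Sequence

Vector = List[float]


def dimension_scores(hvs: Sequence[Vector]) -> List[float]:
    """Per-dimension variance across stored HVs (ASSUMED significance score)."""
    n = len(hvs)
    scores = []
    for d in range(len(hvs[0])):
        col = [hv[d] for hv in hvs]
        mean = sum(col) / n
        scores.append(sum((x - mean) ** 2 for x in col) / n)
    return scores


def build_mask(hvs: Sequence[Vector], keep_ratio: float) -> List[int]:
    """Indices of the top keep_ratio fraction of dimensions, in ascending order."""
    scores = dimension_scores(hvs)
    k = max(1, round(keep_ratio * len(scores)))
    # sorted() is stable, so ties keep their original (lowest-index-first) order
    top = sorted(range(len(scores)), key=lambda d: scores[d], reverse=True)[:k]
    return sorted(top)


def masked_cosine(a: Vector, b: Vector, mask: Sequence[int]) -> float:
    """Cosine similarity computed only over the kept dimensions."""
    dot = sum(a[d] * b[d] for d in mask)
    na = math.sqrt(sum(a[d] ** 2 for d in mask))
    nb = math.sqrt(sum(b[d] ** 2 for d in mask))
    return 0.0 if na == 0.0 or nb == 0.0 else dot / (na * nb)


class AdaptiveMask:
    """Stores HVs and recomputes the dimension mask only when a novel HV arrives."""

    def __init__(self, keep_ratio: float = 0.2) -> None:
        self.keep_ratio = keep_ratio
        self.hvs: List[Vector] = []
        self.mask: List[int] = []

    def add_novel(self, hv: Vector) -> None:
        """Called on novelty detection: store the new HV and refresh the mask."""
        self.hvs.append(list(hv))
        self.mask = build_mask(self.hvs, self.keep_ratio)

    def nearest(self, query: Vector) -> int:
        """Index of the most similar stored HV (requires at least one stored HV)."""
        return max(range(len(self.hvs)),
                   key=lambda i: masked_cosine(query, self.hvs[i], self.mask))
```

In this sketch the cost of recomputing the mask is one pass over the stored hypervectors, paid only inside `add_novel`, which matches the observation that the overhead stays small when novelty detection is infrequent. A mask computed once at the start (for example `build_mask([hv0], 0.2)` with a single stored hypervector) would stay frozen on dimensions that may carry no discriminative information once later hypervectors arrive.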

This paper is available on arXiv under CC BY-NC-SA 4.0 DEED license.